Bayesian Detector Combination

Object Detection with Noisy Crowdsourced (Multi-Rater) Annotations

Zhi Qin Tan¹, Olga Isupova², Gustavo Carneiro¹, Xiatian Zhu¹, Yunpeng Li¹,³

¹University of Surrey  ²University of Oxford  ³King's College London

European Conference on Computer Vision 2024

Abstract

Acquiring fine-grained object detection annotations in unconstrained images is time-consuming, expensive, and prone to noise, especially in crowdsourcing scenarios. Most prior object detection methods assume accurate annotations; a few recent works have studied object detection with noisy crowdsourced annotations, but evaluate on distinct synthetic crowdsourced datasets with varying setups and artificial assumptions. To address these algorithmic limitations and this evaluation inconsistency, we first propose a novel Bayesian Detector Combination (BDC) framework to more effectively train object detectors with noisy crowdsourced annotations, with the unique ability to automatically infer the annotators' label qualities. Unlike previous approaches, BDC is model-agnostic, requires no prior knowledge of the annotators' skill levels, and seamlessly integrates with existing object detection models. Due to the scarcity of real-world crowdsourced datasets, we also introduce large synthetic datasets by simulating varying crowdsourcing scenarios, which allows consistent evaluation of different models at scale. Extensive experiments on both real and synthetic crowdsourced datasets show that BDC outperforms existing state-of-the-art methods, demonstrating its superiority in leveraging crowdsourced data for object detection.

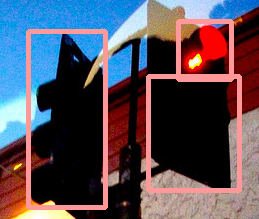

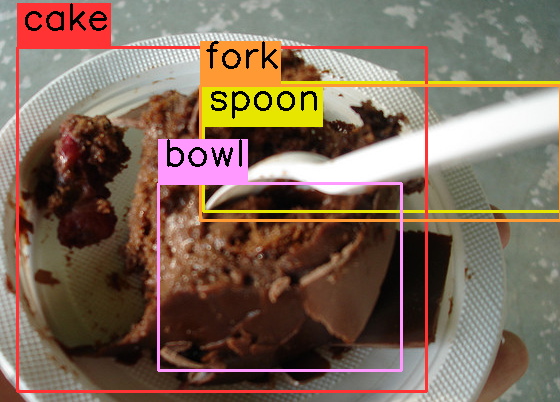

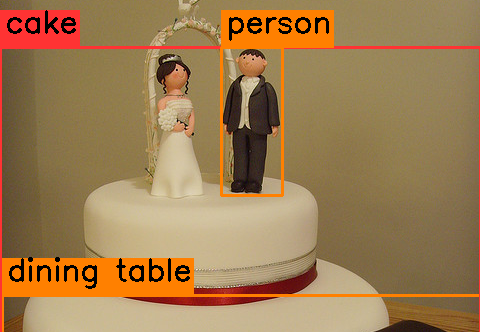

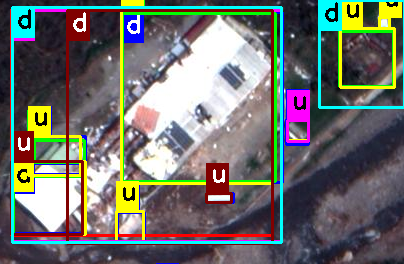

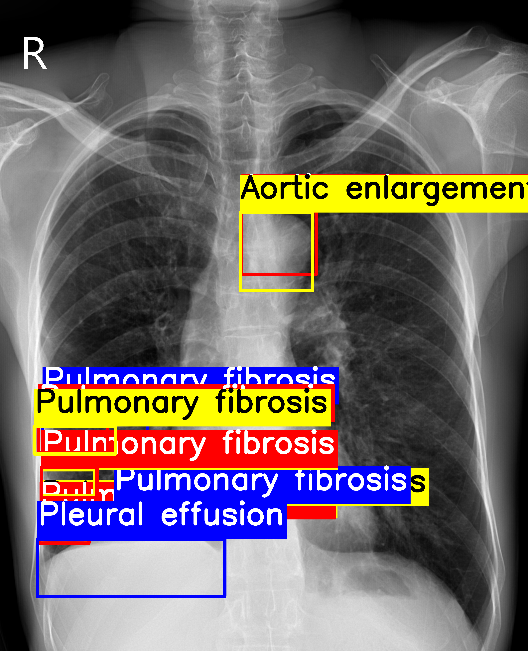

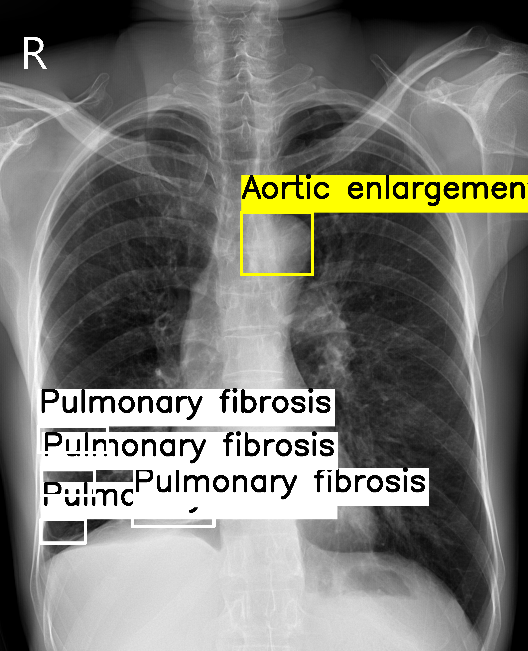

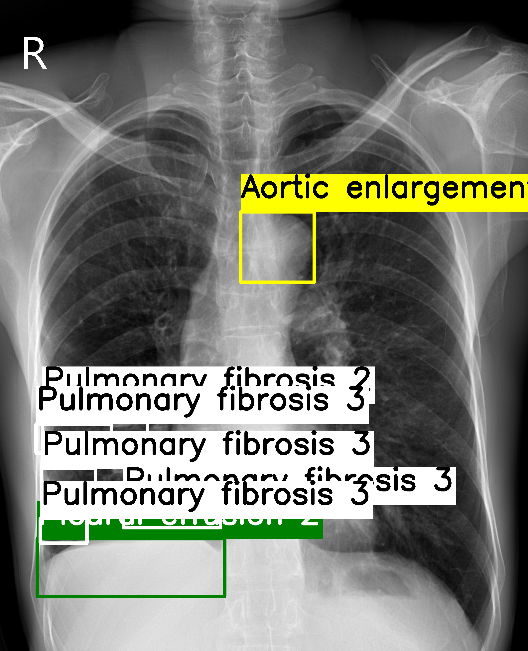

Noisy Crowdsourced (Multi-Rater) Annotations

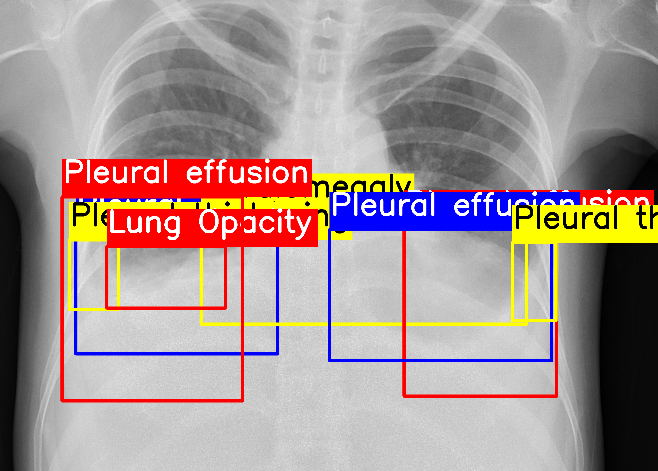

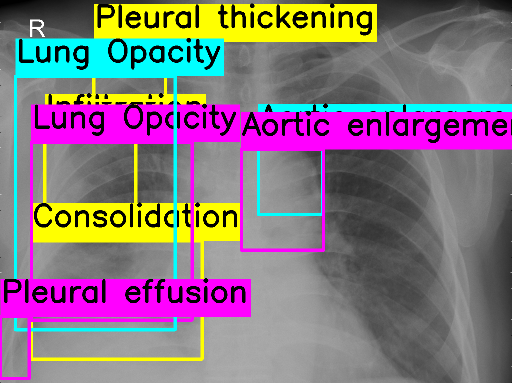

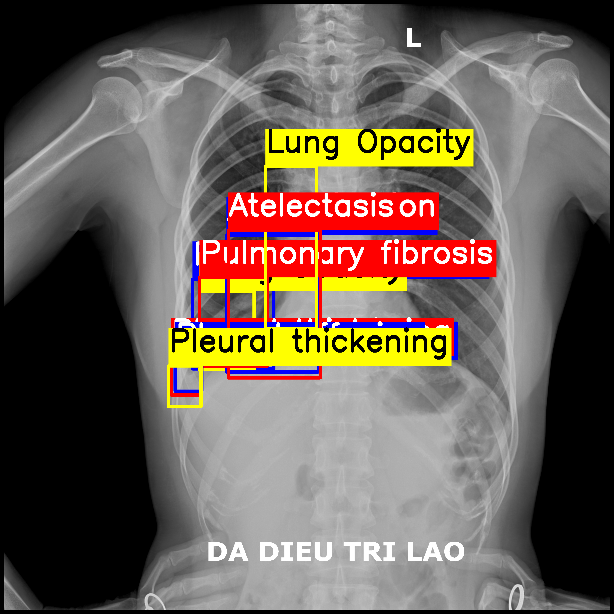

Accurate object annotations are often difficult and expensive to obtain, so researchers typically resort to collecting crowdsourced or multi-rater annotations and aggregating them into a better ground truth for model training. However, acquiring fine-grained object detection annotations in unconstrained images remains time-consuming, expensive, and prone to noise. These challenges are especially significant in complex domains such as dental radiographs, where inter-observer variability and disagreement among expert annotators make unanimity difficult to achieve.

This results in multiple noisy annotations of the same objects from different annotators, i.e., the multi-rater problem in object detection.

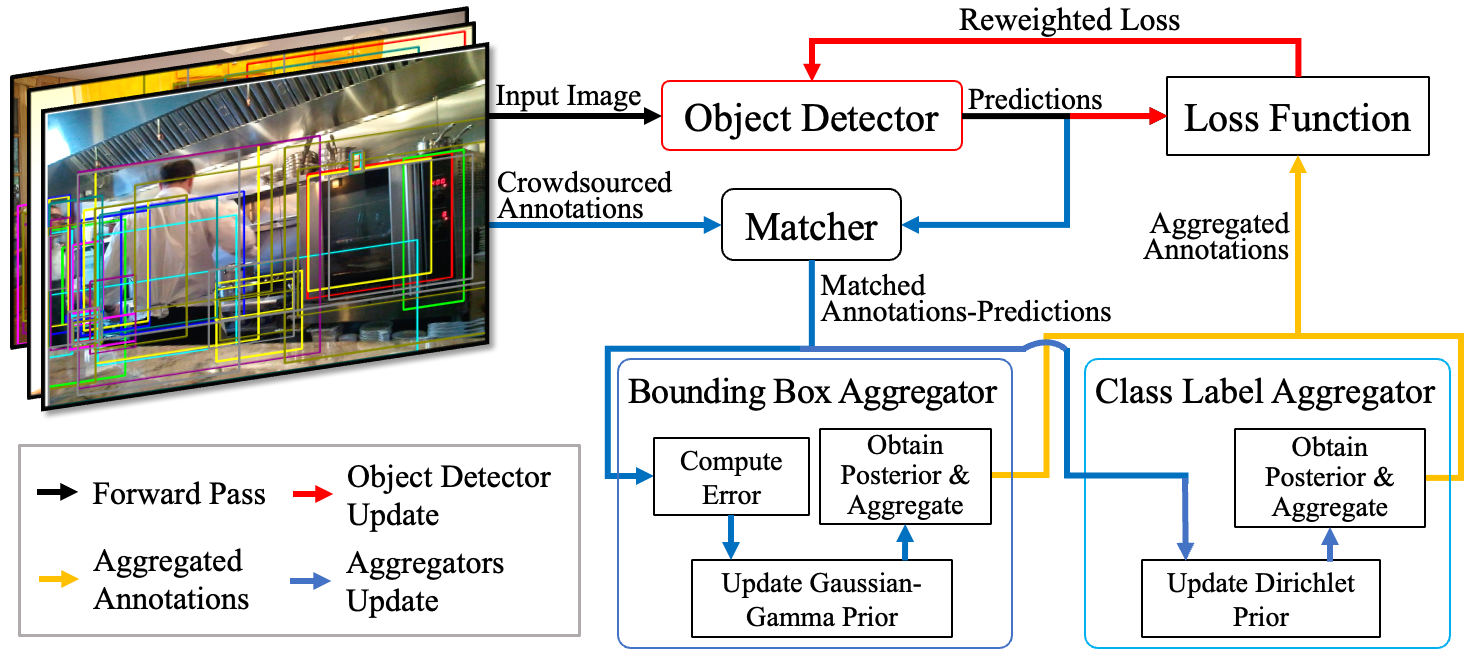

Bayesian Detector Combination

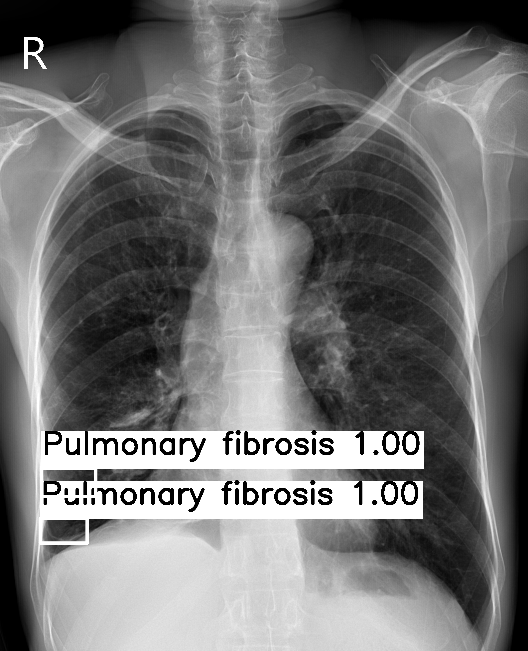

We propose a novel Bayesian Detector Combination (BDC) framework to more effectively train object detectors with only noisy multi-rater annotations, with the unique ability to automatically infer the annotators’ label qualities. Unlike previous approaches, the proposed method is model-agnostic, requires no prior knowledge of the annotators’ skill levels, and seamlessly integrates with existing object detection models.

At a high level, BDC models each annotator’s bounding-box accuracy as a Gaussian distribution with a Gaussian-Gamma prior, and their class-label accuracy as a multinomial distribution with a Dirichlet prior. During training, each annotator’s annotations are matched many-to-one to the model’s predictions using a simple heuristic matching rule. These annotation-prediction matches are then used to update each annotator’s distributions with mean-field variational Bayes. The updated posterior distributions are in turn used to aggregate all annotations matched to the same prediction, and the aggregated targets are used to learn the object detector’s parameters. Optimizing the detector’s parameters and updating the annotators’ distributions alternate iteratively until convergence.
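To make the per-annotator inference more concrete, here is a minimal NumPy sketch under stated assumptions; it is not the authors' implementation. The highest-IoU matching rule, the 0.5 IoU threshold, the prior hyperparameters, the names `iou`, `match`, `AnnotatorModel` and `aggregate`, and the use of plug-in posterior means in place of the full mean-field expectations are all illustrative assumptions. It shows a Dirichlet-multinomial confusion matrix per annotator for class labels, a per-coordinate Gaussian-Gamma (Normal-Gamma) model of box errors, and fusion of all annotations matched to one prediction.

```python
import numpy as np


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(annotations, predictions, thr=0.5):
    """Hypothetical many-to-one rule: each annotation joins its highest-IoU prediction.

    annotations: list of (annotator_id, box, label); predictions: list of boxes.
    Returns {prediction_index: [matched annotations]}; unmatched ones are dropped.
    """
    groups = {}
    for ann in annotations:
        ious = [iou(ann[1], p) for p in predictions]
        if ious and max(ious) >= thr:
            groups.setdefault(int(np.argmax(ious)), []).append(ann)
    return groups


class AnnotatorModel:
    """One annotator's quality model (assumed parameterisation).

    Labels: row c of a confusion matrix has a Dirichlet prior; alpha[c, l] is the
    pseudo-count of "true class c, annotator said l".
    Boxes: each coordinate error (annotated minus predicted) is Gaussian with
    unknown mean mu and precision tau under a Normal-Gamma prior.
    """

    def __init__(self, n_classes, diag=5.0, off=1.0, mu0=0.0, kappa0=1.0, a0=1.0, b0=1.0):
        # Diagonal-heavy Dirichlet prior: annotators assumed better than chance.
        self.alpha0 = np.full((n_classes, n_classes), off) + diag * np.eye(n_classes)
        self.prior = (mu0, kappa0, a0, b0)
        # With no matches yet, the posterior equals the prior.
        self.update(np.zeros((0, n_classes)), np.zeros(0, dtype=int), np.zeros((0, 4)))

    def update(self, pred_probs, labels, errors):
        """Recompute posteriors from the prior plus this pass's matches.

        pred_probs: (N, C) detector class probabilities of the matched predictions
        labels:     (N,)   class indices given by this annotator
        errors:     (N, 4) annotated box minus predicted box
        """
        one_hot = np.eye(self.alpha0.shape[0])[labels]
        # Expected counts: detector's soft belief about the true class x observed label.
        self.alpha = self.alpha0 + pred_probs.T @ one_hot
        mu0, kappa0, a0, b0 = self.prior
        n = errors.shape[0]
        mean = errors.mean(axis=0) if n else np.full(4, mu0)
        ss = ((errors - mean) ** 2).sum(axis=0)
        # Standard conjugate Normal-Gamma update, applied per coordinate.
        self.kappa = kappa0 + n
        self.mu = (kappa0 * mu0 + n * mean) / self.kappa
        self.a = a0 + n / 2
        self.b = b0 + 0.5 * ss + kappa0 * n * (mean - mu0) ** 2 / (2 * self.kappa)

    def log_confusion(self):
        # Plug-in log of the posterior-mean confusion matrix (simplifies E[log pi]).
        return np.log(self.alpha / self.alpha.sum(axis=1, keepdims=True))

    def precision(self):
        # Posterior mean of each coordinate's precision: E[tau] = a / b.
        return self.a / self.b


def aggregate(matched, models, class_prior):
    """Fuse all annotations matched to one prediction into soft training targets."""
    log_q = np.log(class_prior)
    num, den = np.zeros(4), np.zeros(4)
    for k, box, label in matched:
        m = models[k]
        log_q = log_q + m.log_confusion()[:, label]  # each vote weighted by its reliability
        w = m.precision()
        num += w * (np.asarray(box, dtype=float) - m.mu)  # remove the learned offset
        den += w
    q = np.exp(log_q - log_q.max())
    return q / q.sum(), num / den  # class distribution, precision-weighted box


# Toy usage: annotator 0 is accurate; annotator 1 always says class 1 and is offset by +2.
models = {0: AnnotatorModel(2), 1: AnnotatorModel(2)}
probs = np.array([[0.9, 0.1], [0.8, 0.2], [0.1, 0.9]])
models[0].update(probs, np.array([0, 0, 1]), np.zeros((3, 4)))
models[1].update(probs, np.array([1, 1, 1]), np.full((3, 4), 2.0))
anns = [(0, [10, 10, 50, 50], 0), (1, [12, 12, 52, 52], 1)]
groups = match(anns, [[11, 11, 51, 51], [100, 100, 140, 140]])
q, box = aggregate(groups[0], models, np.array([0.5, 0.5]))
# q ≈ [0.67, 0.33]; box ≈ [10.14, 10.14, 50.14, 50.14]
```

In the toy run, annotator 1 labels everything as class 1, so its confusion-matrix posterior gives that vote little evidential weight and the fused class target still favours class 0 by roughly 2:1. Its boxes are offset by 2 pixels; the sketch subtracts the learned offset (shrunk to 1.5 by the prior) and down-weights the annotator through its lower expected precision. In a full training loop, such targets would supervise the detector, and the annotator posteriors would be recomputed after each detector update.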

Experimental Results

To demonstrate the superiority, robustness and generalizability of BDC, our experiments span three popular object detectors and include several natural-image and medical-image datasets. Our extensive evaluation shows that BDC outperforms prior methods with negligible computational overhead, improving detection accuracy by up to 12.7% AP50 when ground truth is available. Please see the paper for the full results.

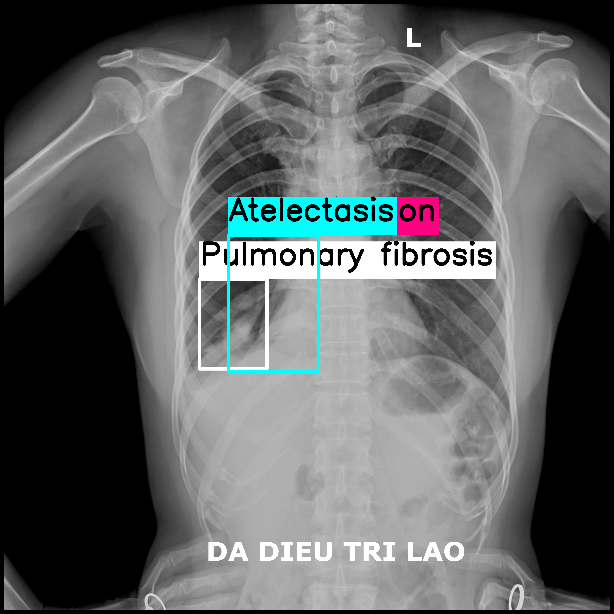

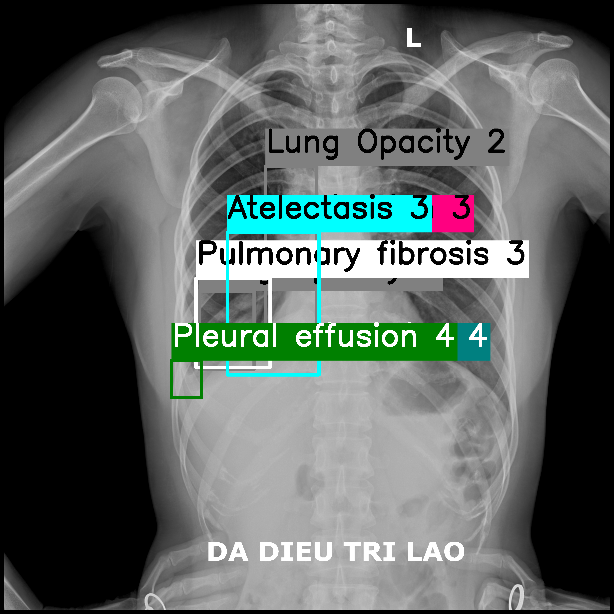

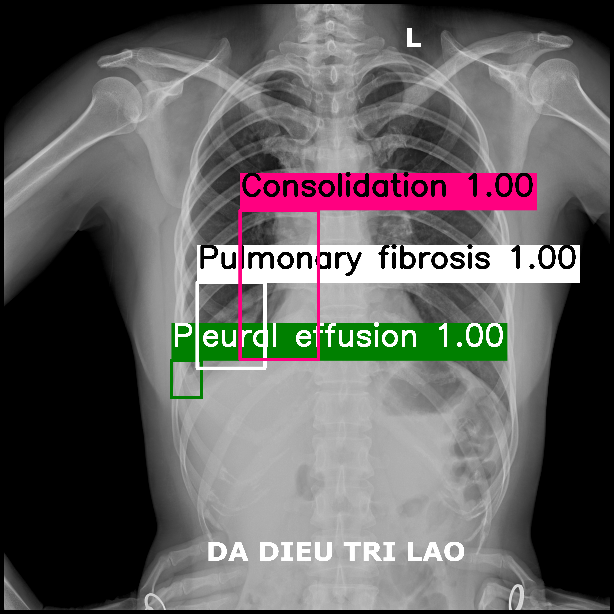

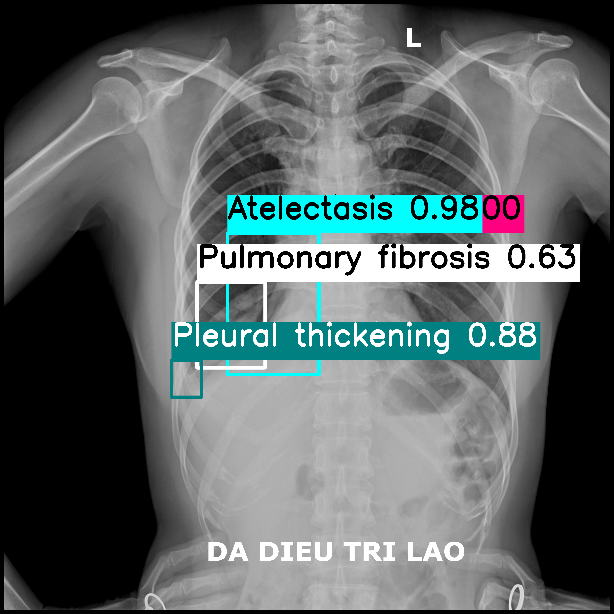

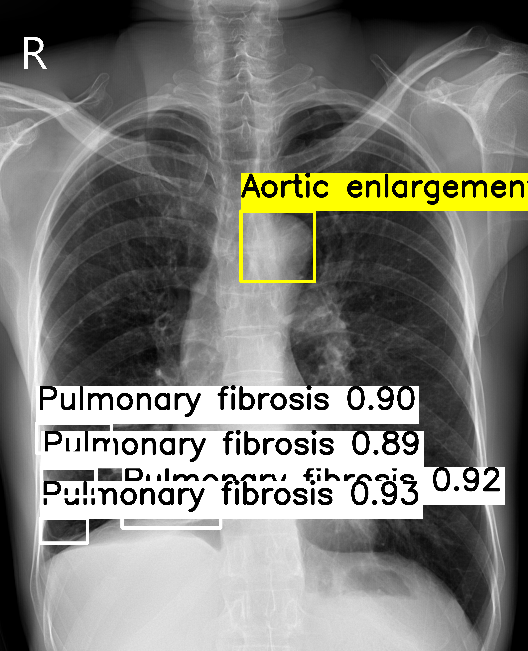

VinDr-CXR

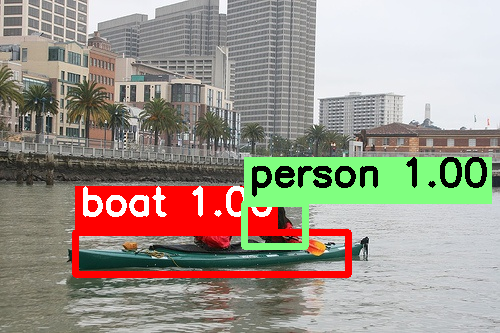

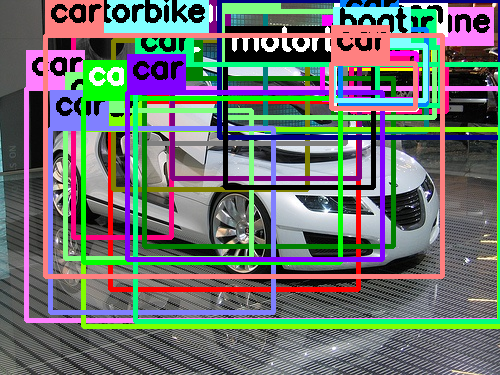

VOC-MIX

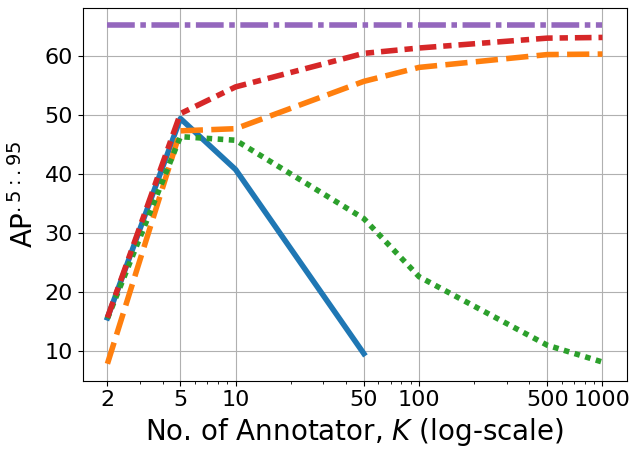

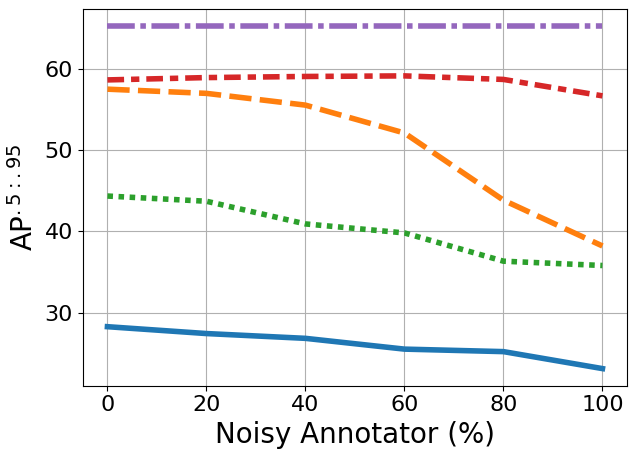

Scalability and Robustness

We analyse the scalability and robustness of our method by varying the number of annotators and the percentage of noisy or poor-performing annotators. Our method consistently outperforms the alternatives in all scenarios.
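Crowdsourcing scenarios like these can be simulated in many ways. The sketch below is one hypothetical scheme, not the protocol used to build the paper's synthetic datasets: a fraction of the annotator pool is designated poor-performing and given larger box jitter, a higher label-flip rate and a higher miss rate. The function name `simulate_annotators` and all noise parameters are assumptions for illustration.

```python
import random


def simulate_annotators(gt, n_annotators, noisy_frac, n_classes, seed=0):
    """Hypothetical crowdsourcing simulator (not the paper's protocol).

    gt: list of ((x1, y1, x2, y2), label) ground-truth objects for one image.
    The first round(noisy_frac * n_annotators) annotators are "poor-performing".
    Returns {annotator_id: [(box, label), ...]}.
    """
    rng = random.Random(seed)
    n_noisy = round(noisy_frac * n_annotators)
    pool = {}
    for k in range(n_annotators):
        # (box jitter as a fraction of size, label-flip prob., miss prob.) are assumed values.
        jitter, flip_p, miss_p = (0.2, 0.4, 0.3) if k < n_noisy else (0.03, 0.05, 0.05)
        anns = []
        for (x1, y1, x2, y2), label in gt:
            if rng.random() < miss_p:
                continue  # this annotator missed the object
            w, h = x2 - x1, y2 - y1
            # Jitter each edge; sorting keeps the box valid (x1 <= x2, y1 <= y2).
            xs = sorted((x1 + rng.gauss(0, jitter * w), x2 + rng.gauss(0, jitter * w)))
            ys = sorted((y1 + rng.gauss(0, jitter * h), y2 + rng.gauss(0, jitter * h)))
            if rng.random() < flip_p:
                label = rng.choice([c for c in range(n_classes) if c != label])
            anns.append(((xs[0], ys[0], xs[1], ys[1]), label))
        pool[k] = anns
    return pool


# Vary the pool composition as in a robustness study: 0%, 30% and 60% noisy annotators.
gt = [((10, 10, 50, 50), 0), ((60, 20, 90, 80), 1)]
pools = {frac: simulate_annotators(gt, 10, frac, n_classes=3) for frac in (0.0, 0.3, 0.6)}
for frac, pool in pools.items():
    n_kept = sum(len(anns) for anns in pool.values())
    print(f"noisy fraction {frac:.0%}: {n_kept} of {10 * len(gt)} objects annotated")
```

Sweeping `n_annotators` and `noisy_frac` and training each method on the resulting pools would mirror the shape of such a scalability and robustness study; the fixed `seed` keeps every pool reproducible, so all methods see identical noisy annotations.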


BibTeX

@inproceedings{bdc2024tan,
  title = {Bayesian Detector Combination for Object Detection with Crowdsourced Annotations},
  author = {Tan, Zhi Qin and Isupova, Olga and Carneiro, Gustavo and Zhu, Xiatian and Li, Yunpeng},
  booktitle = {Proc. Eur. Conf. Comput. Vis.},
  pages = {329--346},
  year = {2024},
  address = {Milan, Italy},
}